Designing an AI chat to give instant feedback on people's videos.

I led the design of an AI-powered feedback experience that increased users' confidence and reduced support tickets.

AI & LLM

iOS native

End to end

Date

Q1 2025

Role

Product Designer

Team

1 designer ⋅ 3 engineers ⋅ 1 PM

Video of the final prototype using a conversational chat

82%

adoption rate within the first month

25%

reduction in support tickets

Overview

Company

allUP is a professional network for people who are "over networking".

Profiles are built with video testimonials from users and their network.

My role

As the Product Designer, I led this net-new initiative end-to-end.

I owned:

Problem framing

Competitive & user research

Concept exploration & prototyping

UX/UI, interaction design, and user flows

Cross-functional collaboration with Engineering + PM

Launch quality, trade-off decisions, & post-launch learnings

The situation

allUP was growing quickly after expanding into hiring

In 2025, allUP expanded into hiring. This enabled candidates and managers to record videos about themselves to find the perfect fit.

This shift unlocked massive growth for the company, accelerating adoption across both sides of the marketplace. However, as the platform grew and more people recorded videos, we encountered a problem...

The problem

People didn’t feel confident recording

We received hundreds of requests for help every day. These included:

Support tickets asking “How was my answer?”

Emails requesting “Can someone review my recording?”

Comments from users expressing anxiety about their videos

At the outset, we personally reviewed videos to help people improve.

This quickly became unscalable as thousands of videos were recorded every day.

We needed a way to build users’ confidence without adding overhead.

Key question

How might we help people feel confident recording videos?

I created this problem statement to align the team on our goal.

We needed to understand:

How can we ensure people feel confident recording?

How do we give people coaching at scale?

How do we make feedback feel human, not clinical or robotic?

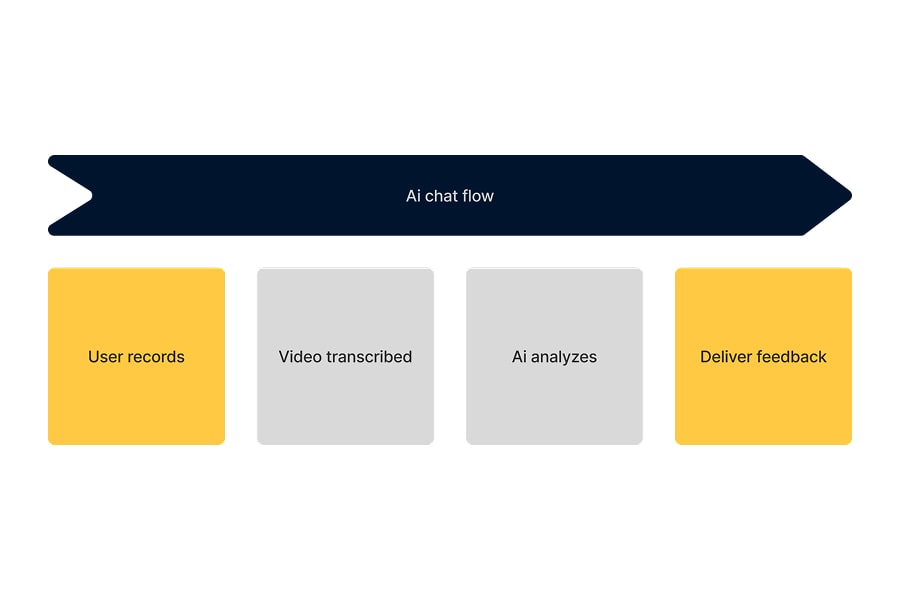

We used allUP’s AI to deliver personalized, uplifting feedback

allUP had been investing heavily in a tailored AI model built for providing feedback. We made a bet to leverage this engine to build users' confidence.

Simplified flow of how the AI would provide feedback

In the midst of ambiguity, I clarified our strategy

Before exploring UI, I aligned the team around several key questions:

How do we deliver critique without hurting confidence?

What format feels human and digestible?

How do we establish trust given AI’s unpredictability?

These questions shaped the UI and UX.

User quotes from support tickets & conversations

I found out why users wanted feedback

Before going too deep, I wanted to validate our assumptions and make sure we were solving the right problems. I read through support conversations, support tickets, and user interview recordings.

Here’s what I found:

People didn’t know what “good” looked like.

Feedback needed to feel supportive, not judgmental.

Most people felt uncomfortable watching their own recordings.

People needed actionable tips they could implement immediately.

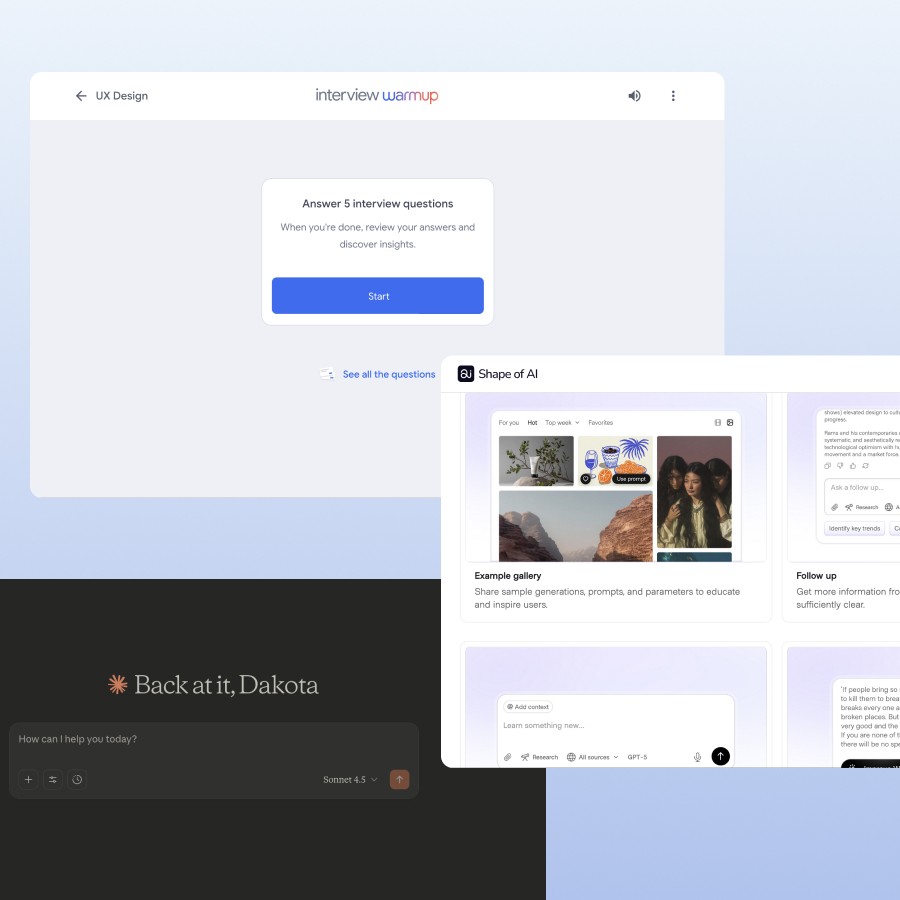

Screenshots of researched sites, including Google interview prep, Claude, GPT, Shape of AI, and more

I led competitive & pattern research to inform my design decisions

AI is still a new paradigm, and design patterns were (and still are) emerging.

To inform my decisions, I researched a few different types of tools.

This included:

AI interview prep tools

Conversational AI assistants

Coaching and assessment platforms

Various frameworks for giving feedback

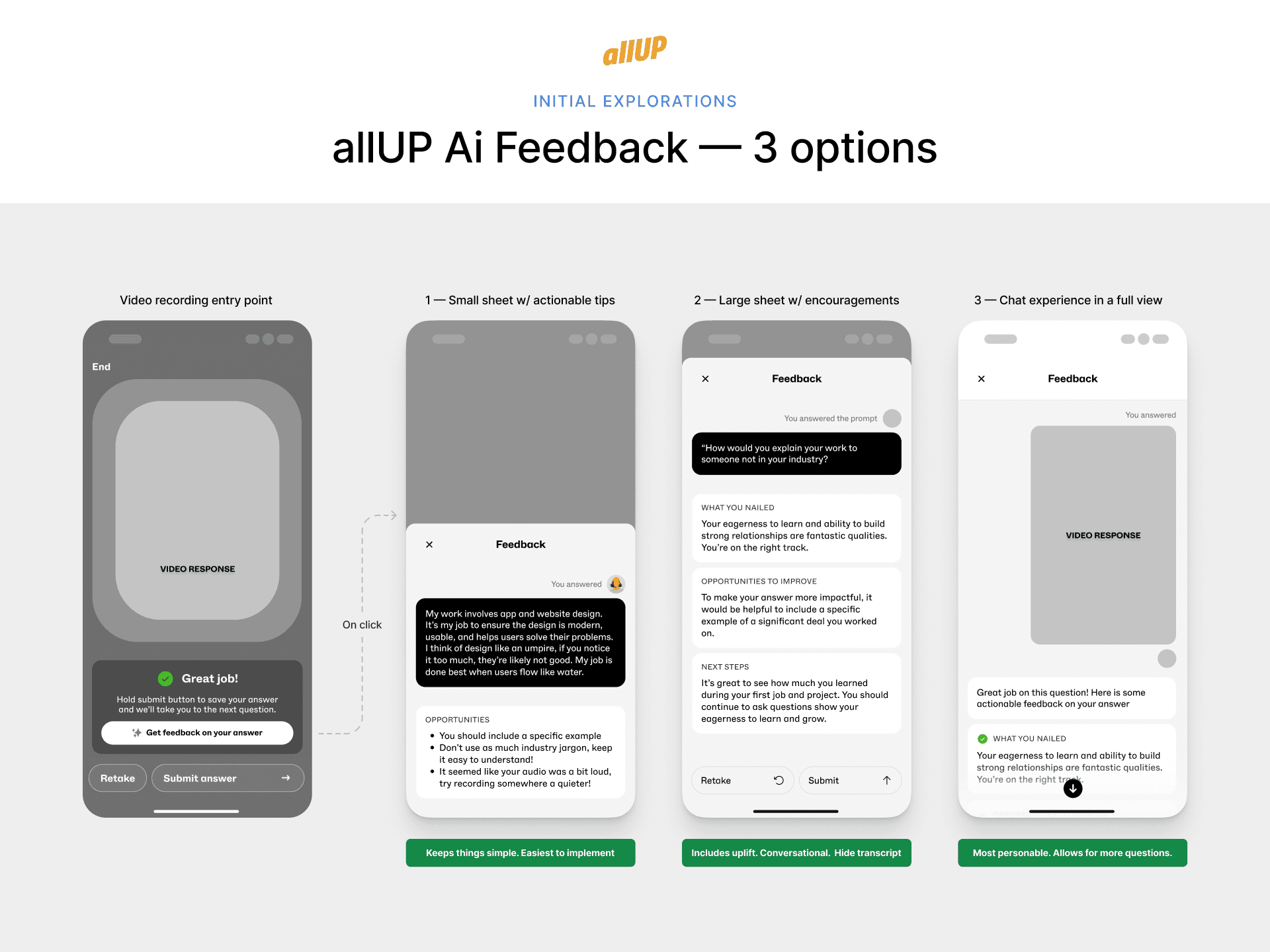

3 proposed directions for showing users feedback

I explored a breadth of directions

This helped me think broadly before committing to a decision.

Here are a few directions I explored:

Small tray with actionable tips

Tall tray with more detailed feedback

Conversational chat experience for contextual follow-up

The goal was to map out possible solution paths so we could make an informed decision.

I presented these explorations to our leadership team

After exploring a breadth of options, I created a presentation and shared this work with the team, including our co-founders, engineers, and product managers.

I walked through the different directions we could take, the pros and cons of each path, and how each would tie into user and business goals.

Video of the final prototype using a conversational chat

I advocated for building a conversational experience

During discussions on which direction to pursue, I advocated for using a conversational experience. This model emerged as the strongest direction because it provided:

A more human, empathetic feel

A natural way to deliver layered feedback

Ability for people to follow up and ask questions

A foundation for future tools allUP intended to build

The team agreed, and we made this our North Star direction.

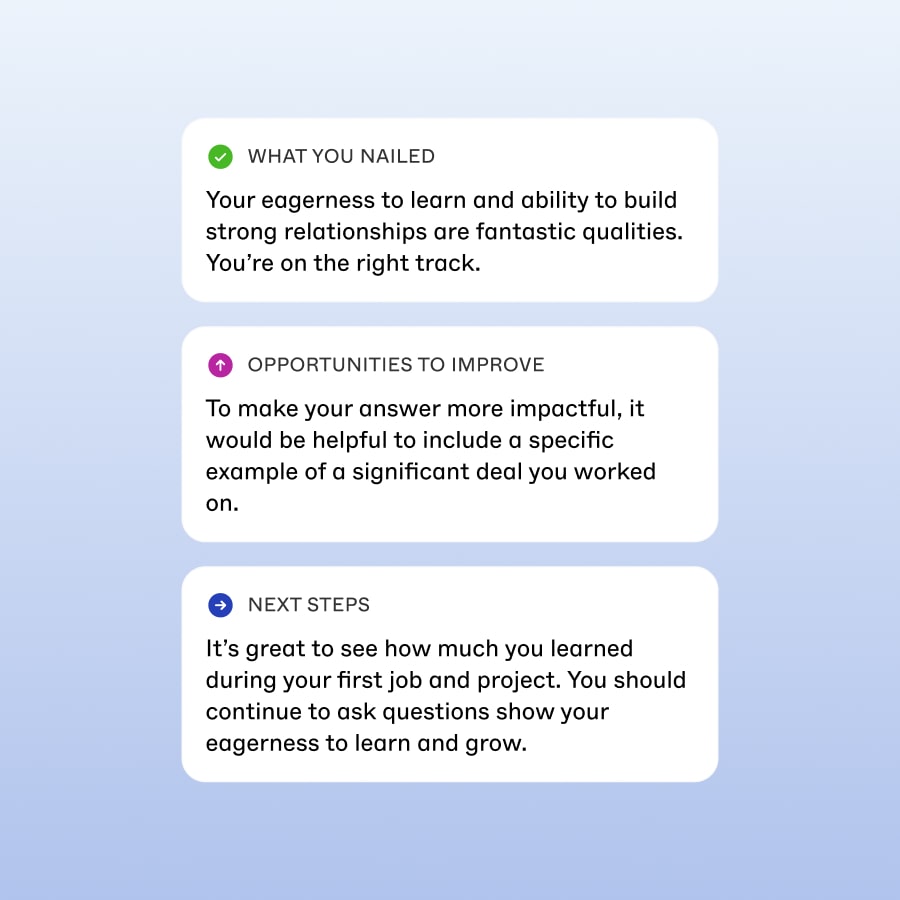

Compliment sandwich format I designed to show feedback

I designed the format of feedback to encourage and uplift people

Many of our users felt uncertain and even discouraged during their job hunt.

I proposed using a “compliment sandwich” to show the feedback.

This meant we would:

Start with praise to build trust and momentum.

Provide a clear piece of constructive feedback.

End with encouragement and actionable next steps.

This kept the experience honest, encouraging, and actionable

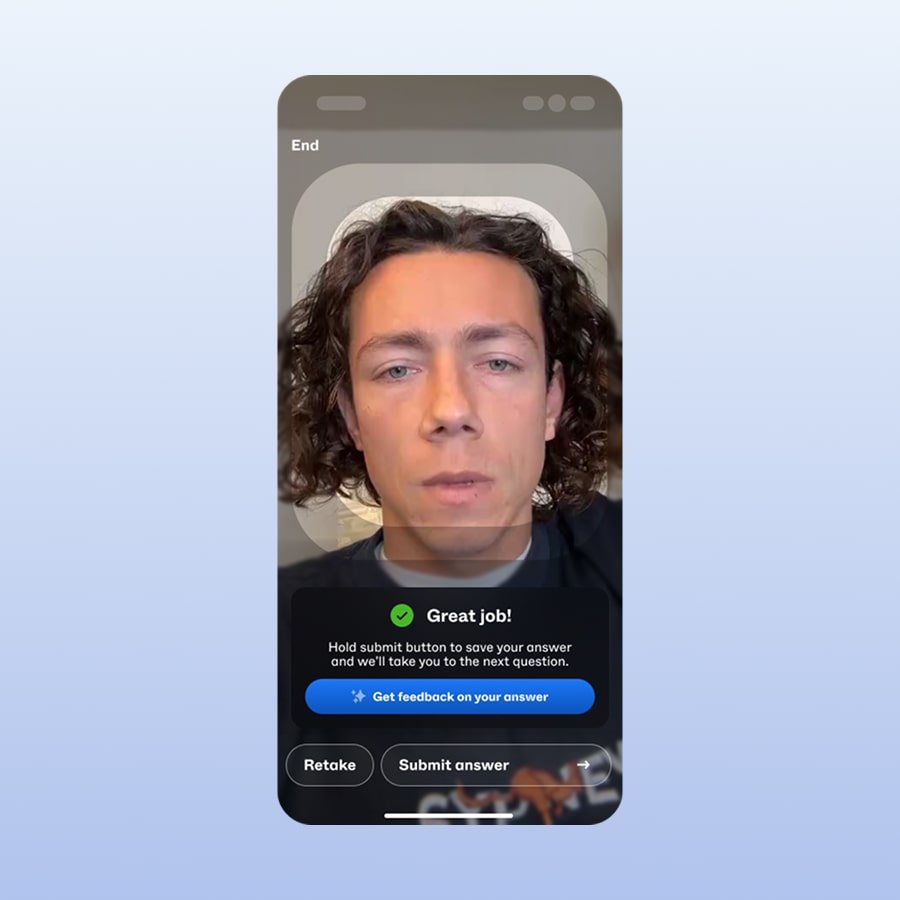

I leveraged a curiosity gap to increase engagement

After a user finished recording, I introduced a CTA that read “See how well you did.”

This subtle curiosity gap significantly increased engagement while remaining optional and non-intrusive. Users who wanted coaching clicked, and users who felt done could move on.

This improved activation without adding friction.

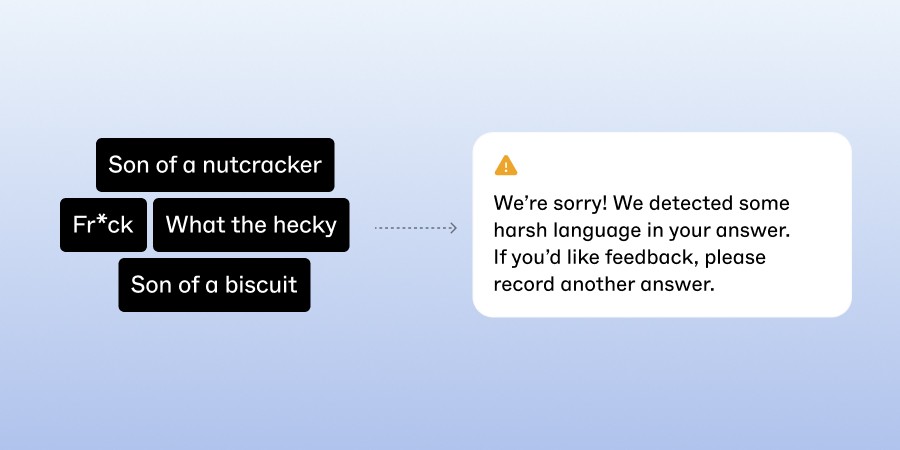

I designed for edge cases and errors

When building with AI, a lot can go right... but a lot can also go wrong.

I worked closely with our PM and engineers to figure out which edge cases could occur and how we could maintain trust when things failed. Here are 4 safeguards I designed:

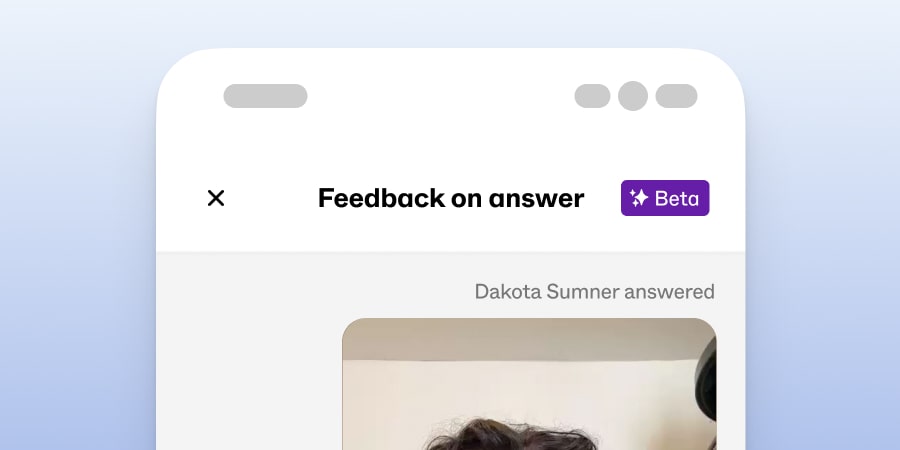

A “Beta” badge

This made it clear that the feature was new and still being tested.

Clear and specific error microcopy

Instead of generic copy, I wrote microcopy specific to each error. This included “Network issues,” “Audio too quiet,” “Answer too short,” “Hard to detect meaning,” and more.

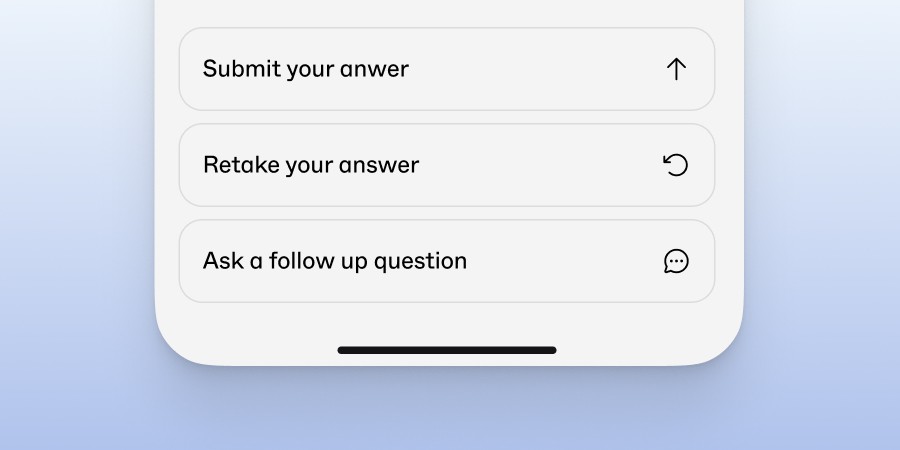

Clear exit paths for users

Users always had the option to retake the video or skip the feedback entirely, preventing them from feeling blocked or stuck.

Backend filters for inappropriate transcripts

If transcripts contained inappropriate, ambiguous, or harmful language, the system prevented feedback and surfaced a neutral message explaining why.

I made tradeoffs to ship a simplified version under real startup constraints

Like many projects, things don't always go exactly as planned :)

As we neared development, we hit feasibility limits. These included time, model inconsistencies, and engineering capacity, all of which were outside my control. As a result, our North Star, the conversational prototype, couldn’t be built in time.

To ensure we met deadlines and launched, I simplified the current prototype:

Reduced design to a single screen

Removed back and forth chatting

Prioritized fast load times and reliability

Preserved the emotional tone and structure

The result was a leaner version that still delivered the core value

Simplified version of the AI feedback with chat functionality removed

The impact was undeniable

Quantitative data was sourced one month after launch from in-flow surveys, user interviews, and support conversations.

Qualitatively:

Users reported feeling “more confident”

Many said the feedback helped them “redo the video with clarity”

Support tickets asking for human review dropped significantly

This initiative improved user activation and validated the need for a scalable coaching layer.

Post-launch, I continued refining the chat experience for a future build

Though we shipped a simplified version, the long-term goal remained the conversational model. The chat prototypes I built helped shape allUP's long-term roadmap.

Learnings & takeaways

Explore wide, then go deep

The best ideas emerge when constraints aren’t prematurely applied

Prototype the path

Providing clickable artifacts makes solutions feel so real. Aim to make them

Treat solutions like sandcastles

You must be willing to compromise to ship. Care for your ideas well, but be willing to let them go as the tide rises.